Web scraping has become an essential component of many data-driven applications, letting businesses extract information from websites for competitive analysis, market research, and business intelligence. Running scraping at scale is harder: it means coordinating many target sites, staying within their rate limits, and keeping CPU, memory, and network usage under control. Celery, a distributed task queue for Python, is a natural fit for orchestrating these workflows.

Understanding Celery’s Role in Web Scraping Architecture

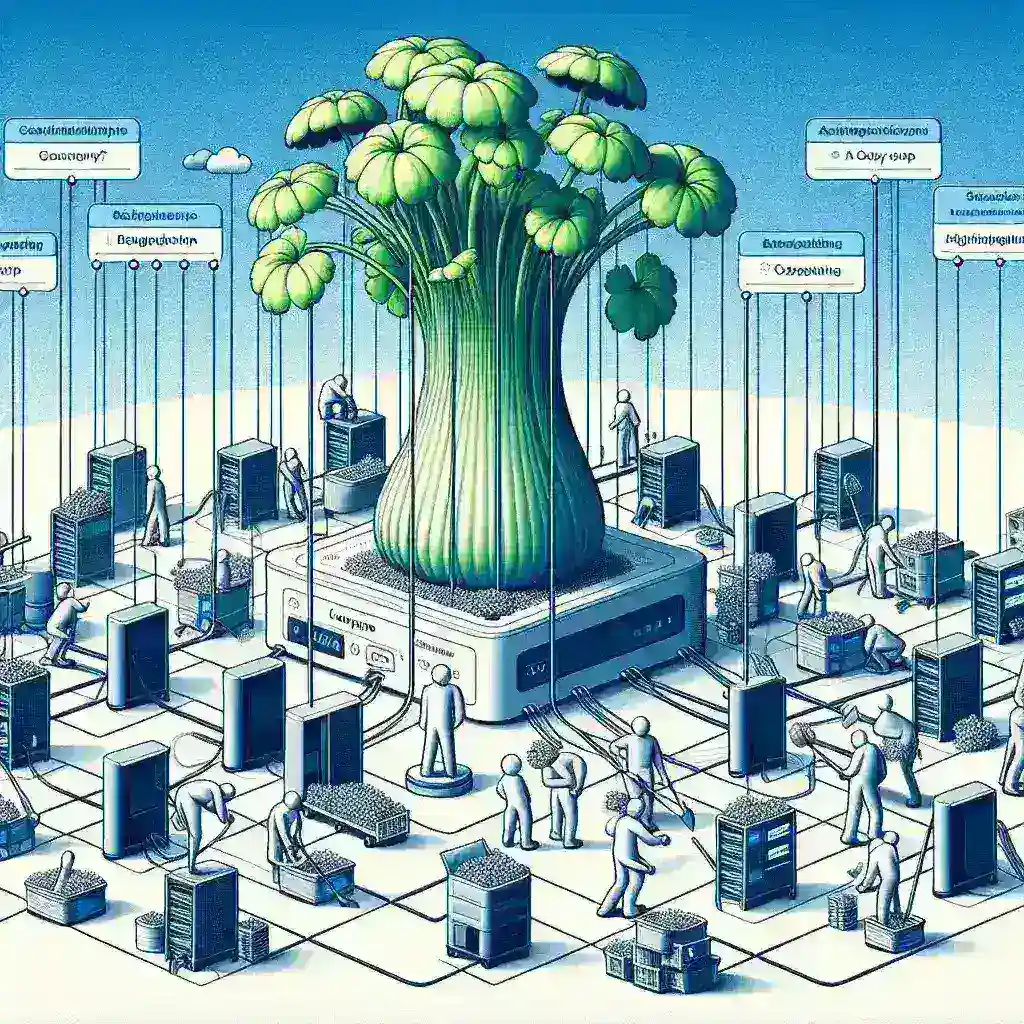

Celery is an asynchronous task queue: producers put task messages on a broker, and worker processes, on one machine or many, pick them up and execute them. This suits web scraping well because most of a scraping job's time is spent waiting on network responses, so running many fetches concurrently across workers raises throughput without a matching rise in CPU cost. Spreading the work across processes and machines also means that one slow or failing site does not stall the rest of the pipeline.

The fundamental principle behind using Celery for scraping tasks lies in its ability to decouple task creation from task execution. This separation allows developers to queue scraping jobs without blocking the main application thread, ensuring that user interfaces remain responsive while background processes handle data extraction. Furthermore, Celery’s built-in retry mechanisms and error handling capabilities provide robust solutions for dealing with common scraping challenges such as network timeouts, server errors, and temporary website unavailability.
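The decoupling can be illustrated without a broker. The stdlib sketch below is an in-process analogy, not Celery: `queue.Queue` stands in for the broker, threads stand in for worker processes, and a fake fetch stands in for the HTTP request. Putting a URL on the queue returns immediately, much as calling a Celery task's `delay()` does, while the workers process items in the background.

```python
import queue
import threading


def worker(tasks: queue.Queue, results: list, lock: threading.Lock) -> None:
    """Consume URLs until a None sentinel arrives."""
    while True:
        url = tasks.get()
        if url is None:  # sentinel: no more work for this worker
            tasks.task_done()
            return
        page = f"<html>{url}</html>"  # stand-in for a real HTTP fetch
        with lock:
            results.append((url, len(page)))
        tasks.task_done()


def run(urls: list[str], n_workers: int = 3) -> list[tuple[str, int]]:
    tasks: queue.Queue = queue.Queue()
    results: list[tuple[str, int]] = []
    lock = threading.Lock()
    threads = [threading.Thread(target=worker, args=(tasks, results, lock))
               for _ in range(n_workers)]
    for t in threads:
        t.start()
    for url in urls:
        tasks.put(url)  # producer side: enqueue and move on without waiting
    for _ in threads:
        tasks.put(None)  # one sentinel per worker
    for t in threads:
        t.join()
    return results
```

In a real deployment the queue lives in Redis or RabbitMQ, so producers and workers can run in different processes or on different machines.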

Setting Up Celery for Scraping Operations

Implementing Celery for scraping begins with the supporting infrastructure: a message broker, worker processes, and monitoring. Redis and RabbitMQ are the most common brokers; the broker is the communication layer between the code that queues tasks (producers) and the workers that run them (consumers). Whether queued tasks survive a restart or failure depends on how the broker is configured (for example, durable queues and persistent messages in RabbitMQ, or AOF/RDB persistence in Redis) and on when workers acknowledge the messages they receive.
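A minimal configuration module for this setup might look like the following, assuming Redis on localhost; the URLs and environment variable names are placeholders. It would be loaded with `app.config_from_object("celeryconfig")`. `task_acks_late` makes a worker acknowledge a message only after the task finishes, so a crash mid-scrape leads to redelivery instead of a lost job; the trade-off is that a task may run twice, so scraping tasks should be safe to repeat.

```python
# celeryconfig.py: loaded with app.config_from_object("celeryconfig")
import os

# Placeholder broker/result URLs; override them through the environment.
broker_url = os.environ.get("CELERY_BROKER_URL", "redis://localhost:6379/0")
result_backend = os.environ.get("CELERY_RESULT_BACKEND", "redis://localhost:6379/1")

# Acknowledge only after the task completes, so a worker crash
# mid-scrape leaves the message to be redelivered rather than lost.
task_acks_late = True
# If the worker process is killed (e.g. out of memory), requeue the task.
task_reject_on_worker_lost = True
# Each process reserves one message at a time, so long scrapes
# do not hoard queued work that idle workers could take.
worker_prefetch_multiplier = 1
```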

The worker configuration plays a crucial role in optimizing scraping performance. Developers can configure multiple worker processes to handle different types of scraping tasks, such as lightweight data extraction jobs and resource-intensive operations that require browser automation. This segmentation allows for better resource allocation and prevents heavy tasks from blocking lighter operations.
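Celery's `task_routes` setting maps task names to queues, and each worker can be told which queues to consume with `-Q`. A sketch assuming two hypothetical tasks, `scraper.tasks.fetch_page` for plain HTTP and `scraper.tasks.render_page` for headless-browser rendering, in an app module named `scraper`:

```python
# celeryconfig.py fragment: send each kind of scraping task to its own queue.
# Task names and queue names are hypothetical.
task_routes = {
    "scraper.tasks.fetch_page": {"queue": "http"},      # lightweight HTTP fetches
    "scraper.tasks.render_page": {"queue": "browser"},  # heavy browser automation
}
# Tasks not listed above go here.
task_default_queue = "default"

# Then start one worker pool per queue, sized for its workload, e.g.:
#   celery -A scraper worker -Q http --concurrency=32
#   celery -A scraper worker -Q browser --concurrency=2
```

Because the browser queue has its own small pool, a backlog of rendering jobs cannot occupy the processes that serve quick HTTP fetches.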

Essential Components for Celery-Based Scraping

- Task Definition: Creating well-structured Celery tasks that encapsulate scraping logic

- Rate Limiting: Throttling requests to each site, for example with Celery's per-task `rate_limit` option (which is enforced per worker instance, not across the whole cluster)

- Error Handling: Establishing robust retry mechanisms and failure management strategies

- Monitoring: Setting up comprehensive logging and task monitoring systems

- Scaling: Configuring horizontal scaling capabilities for handling increased workloads
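Celery's `rate_limit` task option (for example `rate_limit="10/m"`) throttles how often one worker instance starts a given task, but it knows nothing about target domains and is not shared across workers, so per-domain politeness is usually handled inside the task. Below is a minimal in-process token bucket keyed by domain; the class and its parameters are hypothetical, and in a multi-worker deployment the bucket state would have to live in a shared store (such as Redis) to be enforced cluster-wide. When `try_acquire` returns False, a bound task could call `self.retry(countdown=...)` to try again later instead of sleeping.

```python
import time
from urllib.parse import urlsplit


class DomainRateLimiter:
    """Token bucket per domain: `rate` requests per second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int = 1, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the logic can be tested without sleeping
        self._buckets: dict[str, tuple[float, float]] = {}  # domain -> (tokens, last_seen)

    def try_acquire(self, url: str) -> bool:
        """Take one token for the URL's domain; return False if none is available."""
        domain = urlsplit(url).netloc
        now = self.clock()
        tokens, last = self._buckets.get(domain, (float(self.capacity), now))
        # Refill in proportion to elapsed time, never above capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._buckets[domain] = (tokens - 1, now)
            return True
        self._buckets[domain] = (tokens, now)
        return False
```

With `rate=0.5` and `capacity=1`, each domain gets at most one request every two seconds, while requests to different domains do not wait on each other.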

Advanced Task Management Strategies

Effective scraping task management extends beyond basic queue operations to encompass sophisticated scheduling, prioritization, and resource optimization techniques. Celery’s routing capabilities enable developers to direct specific types of scraping tasks to specialized worker pools, ensuring that high-priority operations receive appropriate resources while maintaining overall system efficiency.

One particularly powerful feature is Celery’s ability to implement custom task routing based on various criteria such as target website, data type, or urgency level. This granular control allows for the creation of dedicated worker pools for different scraping scenarios, such as real-time data extraction for financial markets versus batch processing for product catalog updates.
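Celery accepts router functions in `task_routes`; each is called with the task name, its positional and keyword arguments, and the send options, and returns a routing dict (or None to defer to the next router). The sketch below routes by target domain, an urgency flag, and task type. The queue names, the `url` and `urgent` keyword arguments, and the domain table are hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical table of domains whose data is time-sensitive.
PRIORITY_DOMAINS = {"quotes.example.com": "realtime"}


def route_task(name, args, kwargs, options, task=None, **kw):
    """Pick a queue from the task's target URL, urgency flag, and task type."""
    kwargs = kwargs or {}  # kwargs may be None when a task is sent without any
    domain = urlsplit(kwargs.get("url", "")).netloc
    if domain in PRIORITY_DOMAINS:
        return {"queue": PRIORITY_DOMAINS[domain]}
    if kwargs.get("urgent"):
        return {"queue": "realtime"}
    if name.endswith(".render_page"):
        return {"queue": "browser"}
    return {"queue": "batch"}


# In celeryconfig.py, routers are listed in order of precedence:
task_routes = (route_task,)
```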

Implementing Smart Retry Logic

Intelligent retry mechanisms represent a critical aspect of robust scraping systems. Celery provides extensive options for customizing retry behavior, including exponential backoff strategies, maximum retry limits, and conditional retry logic based on specific error types. These features enable scraping systems to gracefully handle temporary failures while avoiding unnecessary resource consumption on permanently failed tasks.

The implementation of custom retry logic should consider factors such as the target website’s response patterns, network conditions, and the criticality of the data being extracted. For instance, e-commerce price monitoring tasks might require aggressive retry strategies with short intervals, while historical data collection operations can afford longer retry delays.
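Celery exposes these as task options: `autoretry_for` lists exception types that trigger an automatic retry, `retry_backoff=True` makes the delay grow exponentially (1 s, 2 s, 4 s, and so on; a number instead of True sets the starting factor), `retry_backoff_max` caps it (600 s by default), `retry_jitter` (on by default) randomizes each delay between zero and the computed value, and `max_retries` bounds the attempts. To make the numbers concrete, the stdlib sketch below reproduces that schedule as described in Celery's documentation, plus a hypothetical `should_retry` helper that classifies HTTP status codes. It is an illustration, not Celery's own code.

```python
import random

# HTTP statuses that usually signal a temporary problem worth retrying.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def should_retry(status_code: int) -> bool:
    """Retry rate limiting and server errors; give up immediately on e.g. 404."""
    return status_code in RETRYABLE_STATUS


def backoff_delay(retries: int, factor: int = 1, maximum: int = 600,
                  full_jitter: bool = False, rng=random) -> int:
    """Seconds to wait before retry number `retries` (0-based).

    The delay doubles each attempt (factor * 2**retries) up to `maximum`.
    With full jitter it becomes a random value between 0 and that delay,
    so many failing tasks do not all retry at the same moment.
    """
    countdown = min(maximum, factor * (2 ** retries))
    if full_jitter:
        countdown = rng.randrange(countdown + 1)
    return max(0, countdown)


# Price monitoring: retry quickly. Historical collection: start at one minute.
price_schedule = [backoff_delay(n, factor=1) for n in range(4)]     # [1, 2, 4, 8]
history_schedule = [backoff_delay(n, factor=60) for n in range(4)]  # [60, 120, 240, 480]
```

The `factor` is the knob that separates the aggressive and patient strategies described above; the cap keeps either from waiting unboundedly.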

Optimizing Performance and Resource Management

Performance optimization in Celery-based scraping systems involves careful consideration of concurrency levels, memory usage, and network resource allocation. Within a single worker, the `--autoscale` option grows and shrinks the process pool between a configured maximum and minimum as load changes; adding or removing worker machines is a separate deployment decision, typically driven by queue depth and system load.
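A useful first estimate for concurrency comes from Little's law: the number of requests in flight equals the request rate multiplied by how long each request takes. The helper below is a back-of-the-envelope sketch (the 20 pages/s and 1.5 s figures are made up for illustration); its result is a starting point for `--concurrency` or the `--autoscale` maximum, to be checked against what the target sites tolerate.

```python
import math


def required_concurrency(target_rps: float, avg_latency_s: float) -> int:
    """Little's law: requests in flight = arrival rate x time each request takes."""
    if target_rps < 0 or avg_latency_s < 0:
        raise ValueError("rate and latency must be non-negative")
    return math.ceil(target_rps * avg_latency_s)


# 20 pages/s at about 1.5 s per response needs about 30 concurrent fetches in
# total, whether that is one worker with --concurrency=30 or three with 10 each.
slots = required_concurrency(20, 1.5)  # 30
```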

Memory management becomes particularly important in large-scale scraping, where tasks handle large pages and parsed documents. Keeping task arguments and results small (passing URLs or record IDs rather than whole page bodies, and not storing results nobody reads) keeps the broker and result backend from growing without bound. Recycling worker processes after a set number of tasks or above a memory ceiling limits the damage from slow leaks in parsing libraries, and task time limits stop a hung request from tying up a worker indefinitely.
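The Celery settings that implement these ideas include the ones below; the numbers are illustrative starting points, not recommendations. `worker_max_memory_per_child` is expressed in kilobytes, and when the soft time limit expires the task receives a `SoftTimeLimitExceeded` exception (from `celery.exceptions`) that it can catch to clean up before the hard limit kills the process.

```python
# celeryconfig.py fragment: keep long-running scraping workers stable.

# Replace each worker process after 200 tasks to bound slow memory leaks...
worker_max_tasks_per_child = 200
# ...or once its resident memory exceeds about 500 MB (value is in kilobytes).
worker_max_memory_per_child = 500_000

# Soft limit raises SoftTimeLimitExceeded inside the task; hard limit kills it.
task_soft_time_limit = 60
task_time_limit = 90

# Don't store return values nobody reads, and expire the ones that are kept.
task_ignore_result = True
result_expires = 3600  # seconds

# Small, inspectable payloads: pass URLs and IDs, not page bodies.
task_serializer = "json"
```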

Database Integration and Data Pipeline Management

Integrating Celery scraping tasks with database systems requires careful consideration of connection pooling, transaction management, and data consistency. The asynchronous nature of Celery tasks necessitates robust database handling strategies that prevent connection exhaustion and ensure data integrity across concurrent operations.

Effective data pipeline management involves implementing proper data validation, transformation, and storage workflows within Celery tasks. This includes establishing clear data schemas, implementing duplicate detection mechanisms, and ensuring proper error handling for database operations. Wrapping each batch of writes in a database transaction, with exceptions handled explicitly, ensures that a failed batch is rolled back rather than left half-written.
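A self-contained sketch of those steps (validation, duplicate detection, transactional storage), using SQLite from the standard library in place of a production database; the `products` table, its columns, and the hashing rule are hypothetical. Validation runs before the transaction, a `UNIQUE` constraint plus `INSERT OR IGNORE` skips records already stored (a price change hashes differently, so it is kept as a new row), and `with conn:` commits the batch or rolls all of it back. In a Celery deployment the connection would typically be opened once per worker process (for example in a `worker_process_init` signal handler) rather than per task, which avoids exhausting database connections.

```python
import hashlib
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS products (
    url          TEXT NOT NULL,
    content_hash TEXT NOT NULL,
    name         TEXT NOT NULL,
    price_cents  INTEGER NOT NULL CHECK (price_cents >= 0),
    UNIQUE (url, content_hash)
)
"""


def validate(item: dict) -> dict:
    """Reject malformed records before they reach the database."""
    if not item.get("name"):
        raise ValueError("missing name")
    price = int(item["price_cents"])
    if price < 0:
        raise ValueError("negative price")
    return {"url": item["url"], "name": item["name"].strip(), "price_cents": price}


def store_items(conn: sqlite3.Connection, items: list[dict]) -> int:
    """Validate, de-duplicate, and insert a batch atomically; return rows inserted."""
    rows = []
    for item in items:
        clean = validate(item)  # any invalid record rejects the whole batch
        key = f"{clean['name']}|{clean['price_cents']}".encode()
        digest = hashlib.sha256(key).hexdigest()
        rows.append((clean["url"], digest, clean["name"], clean["price_cents"]))
    with conn:  # commit on success, roll back everything on an exception
        before = conn.total_changes
        conn.executemany(
            "INSERT OR IGNORE INTO products (url, content_hash, name, price_cents) "
            "VALUES (?, ?, ?, ?)",
            rows,
        )
        return conn.total_changes - before
```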

Monitoring and Debugging Scraping Workflows

Comprehensive monitoring is essential for maintaining reliable scraping operations at scale. Celery workers can emit task events (enabled with the `-E` worker flag) and respond to remote-control commands such as `celery inspect active`, which let developers track task execution, identify performance bottlenecks, and diagnose system issues. Monitoring tools such as Flower, Prometheus (via an exporter), or custom dashboards can consume this information to provide real-time visibility into scraping system health and performance metrics.

Effective debugging strategies for Celery-based scraping systems involve implementing structured logging, task state tracking, and comprehensive error reporting. These capabilities enable rapid identification and resolution of issues ranging from network connectivity problems to website structure changes that affect scraping logic.
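Structured logging means each log line is a machine-readable record with consistent fields. A minimal sketch with the standard `logging` module: a JSON formatter that includes a few hypothetical context fields (`task_id`, `url`, `status_code`) when they are passed via `extra=`. Inside a bound Celery task, the current task ID is available as `self.request.id`, which is a natural value for `task_id`.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object so log pipelines can filter by field."""

    # Hypothetical context keys, supplied per call through `extra=`.
    FIELDS = ("task_id", "url", "status_code")

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        for field in self.FIELDS:
            if hasattr(record, field):
                payload[field] = getattr(record, field)
        if record.exc_info:
            payload["exc"] = self.formatException(record.exc_info)
        return json.dumps(payload)


logger = logging.getLogger("scraper")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("fetched page",
            extra={"task_id": "abc123", "url": "https://example.com", "status_code": 200})
```

Because every line carries the URL and task ID, a failure caused by a site's layout change can be traced from the log straight to the task that hit it.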

Security Considerations and Best Practices

Security considerations for Celery scraping systems encompass multiple layers, including network security, data protection, and access control. Implementing proper authentication mechanisms for Celery brokers and result backends prevents unauthorized access to scraping infrastructure and sensitive data. Additionally, securing communication channels between system components through encryption and secure protocols ensures data integrity and confidentiality.
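A minimal `celeryconfig.py` fragment along these lines, assuming a Redis broker reached over TLS; the environment variable names, host, port, and CA bundle path are placeholders. `broker_use_ssl` is Celery's setting for broker TLS options, and restricting `accept_content` to JSON keeps workers from deserializing pickle payloads, which can execute arbitrary code.

```python
# celeryconfig.py fragment: authenticated, encrypted broker connection.
import os
import ssl

# Credentials live in the environment, never in source control.
# The "rediss://" scheme (double s) selects TLS for the Redis transport.
broker_url = os.environ.get("CELERY_BROKER_URL", "rediss://localhost:6380/0")
broker_use_ssl = {
    "ssl_cert_reqs": ssl.CERT_REQUIRED,  # reject servers with invalid certificates
    "ssl_ca_certs": os.environ.get("CELERY_CA_CERTS", "/etc/ssl/certs/ca-certificates.crt"),
}

# Accept only JSON messages; pickle can run code when deserialized.
accept_content = ["json"]
task_serializer = "json"
result_serializer = "json"
```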

Best practices for responsible scraping operations include implementing proper user agent rotation, respecting robots.txt files, and maintaining reasonable request rates to avoid overwhelming target servers. These practices not only ensure ethical scraping behavior but also reduce the risk of IP blocking and legal complications.
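Python's standard library can check robots.txt rules directly. The sketch below parses an inline robots.txt (in practice it would be fetched from the site once per domain and cached) and reads the site's `Crawl-delay`, which can feed a per-domain rate limit; the user-agent string and rules are illustrative.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-scraper/1.0"  # hypothetical crawler identity

# Inline copy of a site's robots.txt, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text without touching the network."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


rules = load_rules(ROBOTS_TXT)
allowed = rules.can_fetch(USER_AGENT, "https://example.com/products/42")   # True
blocked = rules.can_fetch(USER_AGENT, "https://example.com/private/admin")  # False
delay = rules.crawl_delay(USER_AGENT)                                       # 5
```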

Scaling Considerations and Future-Proofing

As scraping requirements grow, Celery’s horizontal scaling provides a clear path for expanding capacity. Adding worker nodes on more machines increases throughput roughly in proportion, until the broker, the database, or the target sites' own rate limits become the bottleneck, while task management and monitoring stay centralized. This architecture supports the evolution from small-scale data extraction operations to enterprise-level scraping infrastructure.

Future-proofing Celery scraping systems involves designing modular task structures that can accommodate changing requirements and evolving website technologies. Implementing plugin architectures and maintaining clear separation between scraping logic and infrastructure components ensures that systems can adapt to new challenges without requiring complete architectural overhauls.
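One way to keep site-specific logic out of the task layer is a parser registry: each site's parsing code is a plain function registered under its domain, and the Celery task only fetches HTML and dispatches. Supporting a new or redesigned site then means adding or replacing one function. The registry, the `register` decorator, and `shop.example.com` are illustrative, not part of Celery.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urlsplit

Parser = Callable[[str], dict]
PARSERS: dict[str, Parser] = {}  # domain -> site-specific parse function


def register(domain: str):
    """Decorator that registers a parse function for one domain."""
    def decorator(fn: Parser) -> Parser:
        PARSERS[domain] = fn
        return fn
    return decorator


class _TitleParser(HTMLParser):
    """Collects the text inside <title>."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


@register("shop.example.com")
def parse_shop(html: str) -> dict:
    p = _TitleParser()
    p.feed(html)
    return {"title": p.title.strip()}


def parse_page(url: str, html: str) -> dict:
    """Dispatch to the site's parser; a Celery task would fetch, then call this."""
    domain = urlsplit(url).netloc
    try:
        parser = PARSERS[domain]
    except KeyError:
        raise LookupError(f"no parser registered for {domain}") from None
    return parser(html)
```

Since the parsers are plain functions, they can be unit-tested against saved HTML without a broker or workers.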

Real-World Implementation Examples

Practical implementation of Celery for scraping tasks varies significantly based on specific use cases and requirements. E-commerce price monitoring systems typically require high-frequency scraping with real-time processing capabilities, while research data collection projects may prioritize comprehensive coverage over speed. Understanding these different requirements enables the design of optimized Celery configurations that deliver maximum value for specific applications.
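Celery's periodic scheduler, `celery beat`, reads a `beat_schedule` setting, which makes these two profiles easy to express side by side. A sketch with hypothetical task names and intervals; each entry's `options` are passed along when the task is sent, here to put each job on its own queue.

```python
# celeryconfig.py fragment: run with `celery -A scraper beat` alongside the workers.
from datetime import timedelta

beat_schedule = {
    # High-frequency price checks on a dedicated queue (hypothetical task).
    "refresh-competitor-prices": {
        "task": "scraper.tasks.refresh_prices",
        "schedule": timedelta(minutes=5),
        "options": {"queue": "realtime"},
    },
    # Broad research crawl once a day, favoring coverage over speed.
    "crawl-research-corpus": {
        "task": "scraper.tasks.crawl_corpus",
        "schedule": timedelta(days=1),
        "options": {"queue": "batch"},
    },
}
```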

These patterns appear across industries, from financial services companies monitoring market data to retail businesses tracking competitor pricing. In each case, the benefit comes from combining Celery’s task management (queues, retries, scheduling, and routing) with sound scraping practices, which can yield real competitive advantages and operational efficiencies.

Celery’s documentation and community resources are a further practical asset for developers implementing and maintaining scraping systems, offering best practices, troubleshooting guidance, and ongoing improvements to the project itself.

Conclusion: Maximizing Scraping Efficiency with Celery

Used strategically, Celery moves data extraction toward systems that are more scalable, reliable, and maintainable. By leveraging Celery’s distributed architecture, error handling, and monitoring capabilities, organizations can build scraping infrastructure that delivers consistent results while adapting to changing requirements and growing workloads.

The journey toward mastering Celery-based scraping systems requires careful attention to architecture design, performance optimization, and operational best practices. However, the investment in properly structured Celery implementations pays dividends through improved reliability, enhanced scalability, and reduced operational overhead. As web scraping continues to evolve as a critical business capability, Celery provides the foundation for building future-ready data extraction systems that can meet the demands of modern data-driven organizations.